Beyond the Chatbot: Why I’m Betting My Career on the Google AI Stack

By Ben White | Part 1 of the "Agentic Architect" Series

In 2023, we were amazed when a computer could write a haiku. In 2024, we built "wrappers," thin UIs around API calls. In 2025, the industry hit a wall: chatbots were impressive, but they couldn't get real work done. They hallucinated, they got stuck in loops, and they couldn't integrate with legacy systems without massive friction.

Welcome to 2026. The era of the Chatbot is dead. The era of the Agent is here.

As I look at the fragmented landscape of AI tools, from the open-source chaos of LangChain to the walled garden of OpenAI, I’ve made a strategic decision. I am doubling down on the Google AI ecosystem.

This isn’t just brand loyalty. It’s an architectural bet. Here is why the Google stack (Gemini, Vertex AI, ADK) is the only platform currently capable of supporting the full lifecycle of true agentic application development.

The "Franken-stack" Problem

If you are building AI apps today without an integrated ecosystem, your architecture likely looks like a "Franken-stack":

Model: OpenAI (for reasoning)

Vector DB: Pinecone (for memory)

Framework: LangChain (for orchestration)

Hosting: AWS Lambda (for compute)

Monitoring: Something custom you cobbled together.

This works for prototypes. It fails in production. Every hop between services adds latency. Debugging a hallucination across three different vendor logs is a nightmare. And security? Good luck.

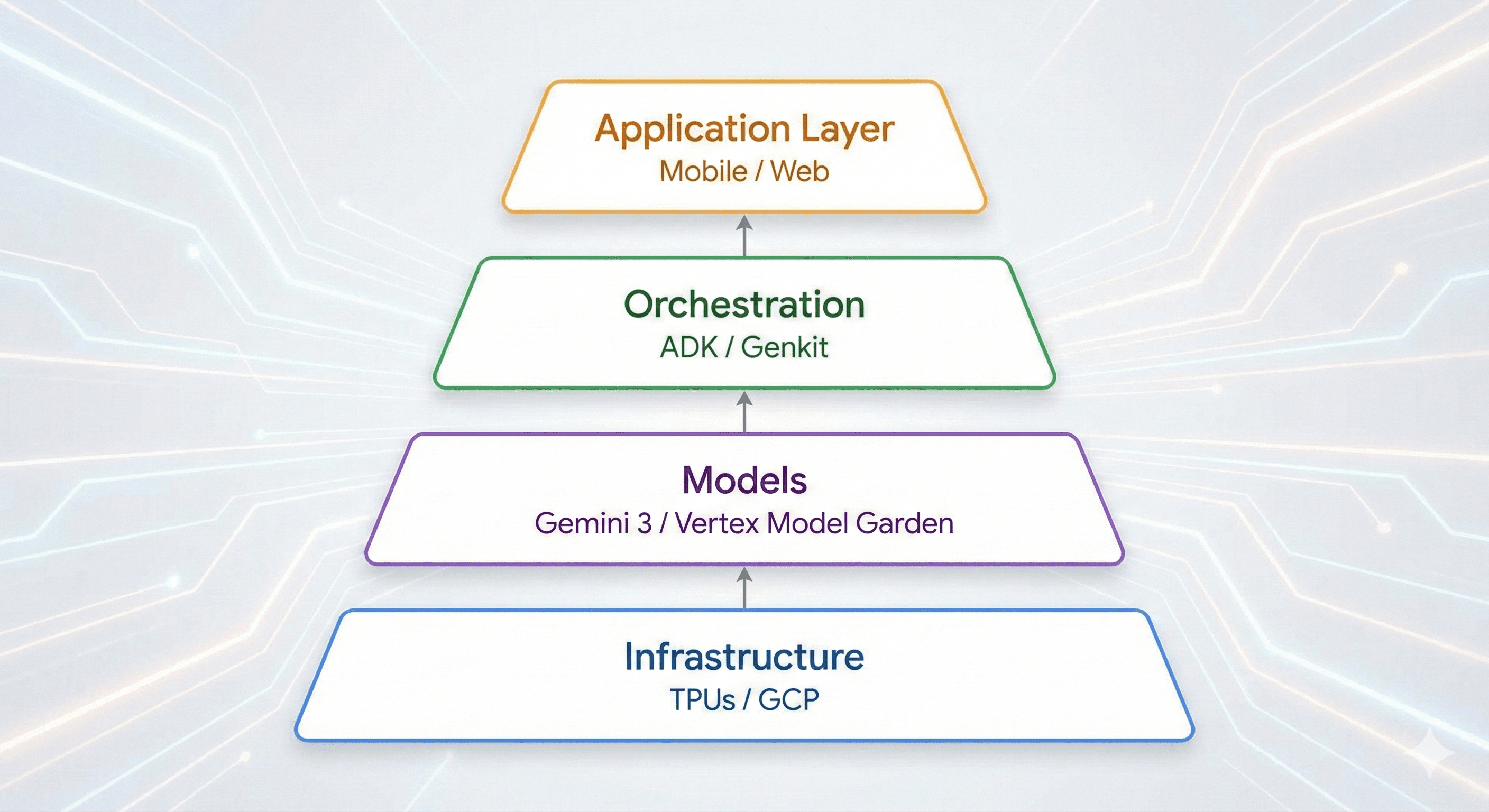

The Unified Theory: How the Google Stack Fits Together

The reason I’m focusing my journey here is that Google has solved the integration layer. It allows me to stop being a "plumber" connecting APIs and start being an "architect" designing agents.

Here is the stack I will be dissecting over this 12-part series:

1. The Brain: Gemini 3 & Multimodality

We have moved past "text-in, text-out." The Gemini 3 family (Pro and Flash) offers native multimodality.

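Why it matters: I don't need a separate transcription service (like Whisper) or a vision model. I can send raw audio and video directly to Gemini and, through the Live API, stream them in real time so the model "sees" and "hears" as the conversation happens. Cutting out the transcription step removes a full service round trip from every turn, which can add up to hundreds of milliseconds. That is critical for voice agents.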

2. The Skeleton: Agent Development Kit (ADK)

This is the game-changer. Late last year, Google's Agent Development Kit (ADK) matured into a stable, open-source framework for building agents.

The shift: Unlike generic frameworks, ADK is opinionated. It treats "Tools" and "Agents" as first-class citizens with explicit, typed contracts. That lets us build hierarchical agent systems (a Manager Agent delegating to a Coder Agent) where every tool's inputs are declared through ordinary Python type hints instead of loosely structured prompt text.
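ADK builds a tool's schema from an ordinary Python function (its name, docstring, and type hints) and lets an agent hand work to other agents listed in its sub_agents. The stdlib-only sketch below does not use ADK at all; declare_tool, SketchAgent, and run_tests are hypothetical names chosen to show what a "typed tool contract" and a manager/coder hierarchy look like once they are plain data.

```python
import inspect
import typing
from dataclasses import dataclass, field

# Simplified mapping from Python annotations to JSON-schema type names.
# (Hypothetical helper: real frameworks support many more types.)
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def declare_tool(fn: typing.Callable) -> dict:
    """Derive a function declaration (name, description, typed params) from a Python function."""
    hints = typing.get_type_hints(fn)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": JSON_TYPES[hints[name]]}
        # Parameters without defaults become required fields of the contract.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


@dataclass
class SketchAgent:
    """Hypothetical stand-in for an agent node: name, description, tools, and child agents."""
    name: str
    description: str
    tools: list = field(default_factory=list)
    sub_agents: list = field(default_factory=list)

    def tool_declarations(self) -> list:
        return [declare_tool(t) for t in self.tools]

    def walk(self, depth: int = 0):
        """Yield (depth, agent) for this agent and every descendant, depth-first."""
        yield depth, self
        for child in self.sub_agents:
            yield from child.walk(depth + 1)


def run_tests(path: str, verbose: bool = False) -> str:
    """Run the test suite under `path` and return a short summary."""
    return f"tests under {path}: not actually run in this sketch"


coder = SketchAgent("coder", "Writes code and runs its tests.", tools=[run_tests])
manager = SketchAgent("manager", "Plans the work and delegates coding tasks.", sub_agents=[coder])

if __name__ == "__main__":
    # Print the agent tree with each agent's declared tools.
    for depth, agent in manager.walk():
        print("  " * depth + agent.name, [d["name"] for d in agent.tool_declarations()])
    print(declare_tool(run_tests)["parameters"])
```

The point of the design is that the contract lives in the function signature itself: change a type hint and the declared schema changes with it, rather than drifting out of sync with a hand-written prompt.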

3. The Muscle: Firebase Genkit

For the "last mile" of development—getting AI into a mobile app—Firebase Genkit is unmatched.

The use case: If I need an AI feature on a user's phone, I don't want to spin up a Kubernetes cluster to serve it. Genkit lets me deploy AI flows to Cloud Functions for Firebase with a single command, and it ships with built-in evaluation tools.

4. The Nervous System: Vertex AI & Grounding

This is where the enterprise value lives.

Grounding: The biggest killer of AI adoption is "lying." Vertex AI’s grounding features let my agents anchor their answers in retrieved sources before responding: the live web through Grounding with Google Search, or my own enterprise data through grounding with Vertex AI Search. It isn't just RAG (Retrieval-Augmented Generation); it's RAG with the world's best search index.
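When grounding is enabled, the Gemini API returns groundingMetadata alongside the answer: the retrieved web sources (groundingChunks) and which span of the answer each source supports (groundingSupports). Surfacing those links is what lets a user verify a claim instead of trusting it. Here is a minimal stdlib sketch, assuming that camelCase JSON shape; add_citations and the sample data are hypothetical, no model is called, and offsets are treated as character positions as a simplification (check the API reference for how offsets are counted in non-ASCII text).

```python
def add_citations(text: str, metadata: dict) -> str:
    """Insert markdown citation links after each grounded segment of `text`.

    Assumes `metadata` mirrors the groundingMetadata JSON shape
    (groundingChunks / groundingSupports); offsets are treated as characters.
    """
    chunks = metadata.get("groundingChunks", [])
    supports = metadata.get("groundingSupports", [])
    # Insert from the end of the text backwards so earlier offsets stay valid.
    for support in sorted(supports, key=lambda s: s["segment"]["endIndex"], reverse=True):
        end = support["segment"]["endIndex"]
        links = [
            f"[{i + 1}]({chunks[i]['web']['uri']})"
            for i in support.get("groundingChunkIndices", [])
            if 0 <= i < len(chunks) and "web" in chunks[i]
        ]
        if links:
            text = text[:end] + " " + ", ".join(links) + text[end:]
    return text


# Hand-built, hypothetical sample; a real one comes from a grounded model call.
answer = "Paris is the capital of France. It hosts the Louvre."
metadata = {
    "groundingChunks": [
        {"web": {"uri": "https://example.com/a", "title": "Source A"}},
        {"web": {"uri": "https://example.com/b", "title": "Source B"}},
    ],
    "groundingSupports": [
        {"segment": {"endIndex": 31}, "groundingChunkIndices": [0]},
        {"segment": {"startIndex": 32, "endIndex": 52}, "groundingChunkIndices": [0, 1]},
    ],
}

if __name__ == "__main__":
    print(add_citations(answer, metadata))
```

Processing segments in descending order of endIndex is the key design choice: each insertion only shifts text after it, so the offsets of segments not yet processed remain correct.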

The Roadmap: From Zero to Expert

This article marks the start of a 12-part series where we will not just "talk" about these tools—we will build with them.

We are going to move beyond 'Hello World.' We are going to build:

Rapid Prototypes: Grounded research agents built in 15 minutes using Vertex AI Agent Builder (No-Code).

Production Systems: Porting that logic to the Vertex AI SDK for full Python control and orchestration.

Real-World RAG: Comparing 'Easy Button' enterprise search against custom vector pipelines.

Mobile Deployments: Shipping our agents into mobile apps using Firebase Genkit.

The gap between 'AI Enthusiast' and 'AI Developer' is the ability to choose the right tool for the right problem. Sometimes that is a Python script; sometimes it is a drag-and-drop console. We will master both.

The tools are ready. The models are ready.

Now, let's build.

Next Month:

15 Minutes to Autonomy: Building Your First Agent with Vertex AI Agent Builder

Build a fully grounded research agent in 15 minutes, without writing any code, using Vertex AI Agent Builder.